Apple uses child pornography to normalise tech espionage

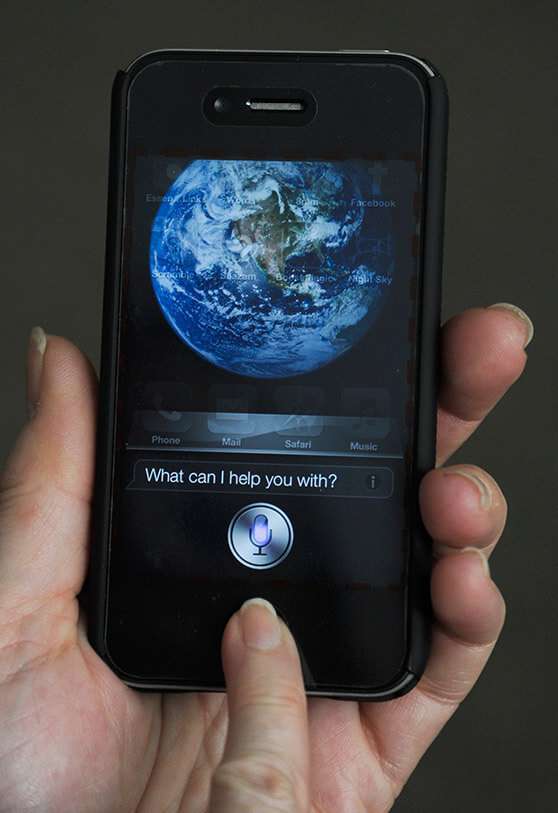

"Apple suspends the programme through which third parties listened to your conversations with Siri", read a headline in August 2019. The report noted that company workers had admitted in statements to The Guardian that they had ended up hearing conversations about sex or drugs. Months later came the campaign that turned the bitten apple logo into a padlock and promised that "what happens on your iPhone, stays on your iPhone".

In August 2021 Apple opened a dangerous can of worms with its latest press release: a 12-page document explaining that from now on it will scan the images that users store in iCloud. It also wants to develop two other measures: one is the ability to warn of "sensitive" material in Messages, and the other is the option for the device to warn when Siri or Search offer unsafe solutions or when users search for information about child pornography.

In the end, Apple's idea may be a good one, and even a necessary one in the society we live in. We all know the stories of monsters abusing children. From time to time the police dismantle mafias that traffic in images of minors. And preying on that innocence are the wolves on WhatsApp who ask for photos of minors in order to commit blackmail. Yet all this unbearable reality clashes head-on with the security we deserve to have in our technological environment.

There are two positions regarding Apple's intention. One is to feel free and secure because there is nothing to hide, even if you have something sensitive but legal: not to be afraid of having the photos on your mobile phone stolen, because there is nothing in them to be ashamed of and nothing that could ruin a life. Paedophiles and child pornography mafias are very careful not to save those images on any server. Apple is going straight after the sick people who take these pictures and traffic in them: the wolves in sheep's clothing who use their position, their job or the trust of others to obtain material. The case summaries of the shadiest paedophile cases show what kinds of people have the access needed to take these pictures. It is a little nerve-wracking to know certain things, and Apple has decided to get into those phones to catch the bad guys.

But the other position is that Cupertino is about to take an action that puts its customers at risk, the same customers who are told that their mobiles, computers and tablets are the most secure on the market. To these users it tries to explain that it has designed a cutting-edge technology that will convert their photos into numbers, compare those numbers with those of images of child pornography and, if they coincide, report them to the police. Apple takes its role as a private detective too seriously. It is putting the trustworthiness of its operating system at risk in order to obtain a result that is expected to be very small.
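
To make the idea of "converting photos into numbers" concrete, here is a minimal sketch in Python. It is not Apple's algorithm: it uses a simple average hash over a tiny grayscale grid, and the function names (`average_hash`, `hamming`, `find_matches`) and the sample grids are hypothetical, chosen only for illustration.

```python
from typing import List, Set

# Assumption: an "image" is a small grid of grayscale values (0-255).
Image = List[List[int]]


def average_hash(pixels: Image) -> int:
    """Turn an image into a number: one bit per pixel, set to 1
    when the pixel is brighter than the mean of the whole grid."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def find_matches(library: List[Image], known: Set[int],
                 max_distance: int = 0) -> List[int]:
    """Return the positions of library images whose fingerprint
    coincides with (or is within max_distance bits of) a known one."""
    return [
        i for i, img in enumerate(library)
        if any(hamming(average_hash(img), k) <= max_distance for k in known)
    ]


# Hypothetical data: one "known" image and a two-photo library.
known_image = [[10, 200], [200, 10]]
similar = [[0, 255], [255, 0]]      # different pixels, same pattern
unrelated = [[200, 10], [10, 200]]  # inverted pattern

known_hashes = {average_hash(known_image)}
flagged = find_matches([similar, unrelated], known_hashes)
```

In this toy example `similar` is flagged even though none of its pixels equal those of `known_image`. That is the point of fingerprinting, since it survives small alterations, and it is also why two genuinely different photos can end up with the same number.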

All that Apple explains in its PDF is that it will scan the photos of users who have iCloud Photos activated. In other words, for those users there is no option to disable "fingerprinting", as Apple calls it. In the case of Messages, they say that "everything stays in the family", so Apple will monitor the mobiles of minors belonging to a family account. This is an "opt-in" feature, activated only if the user chooses it. Parents will receive a warning when their children send or receive compromising photos, but the authorities will not be notified. In Spain, Apple won't have much work to do in this area, because the obsession with WhatsApp means that text messaging between Apple devices is hardly used, even though it is free and, so far, more secure.

Apple is not going to solve the scourge of child pornography with this measure. That will be done by the authorities, with the great work they do every day disarming sick minds and locking up these monsters who live a seemingly normal life. Apple's campaign has a lot of marketing in it: a lot of form and little substance. If they manage to pinpoint a single abuser, that's good news. But if along the way they pinpoint a few innocent people because their AI has failed, or they lose that image of security, it won't have been worth it, because that offender would have been brought down by police work anyway.

Apple did not want to unlock an iPhone 4 to get more information about the Boston terrorists. Now it claims to be able to get into mobile phones, review photos without looking at their content and detect child pornography. It is a minefield that will end up in court. It may be unpopular to say so, but looking for such images today cannot justify attacking free speech tomorrow.