The use of artificial intelligence in the military field

The use of artificial intelligence in our society has grown rapidly in all areas, from political and social life to the military field. In the military, AI has been shown to be capable of optimising and automating many missions. However, not everything is positive: the same algorithms that deliver this efficiency can be fooled and hacked.

To understand the subject, it is first necessary to explain how this type of intelligence works. Regardless of the field in which artificial intelligence (AI) is applied, it follows the same basic process: it combines large amounts of data with fast processing and intelligent algorithms. This combination allows the software to learn automatically from patterns in the data and to automate processes. The main difference from a conventional computer programme is that AI is not given explicit step-by-step instructions for obtaining each result. Instead, it derives its own rules from examples, replicating a cognitive model in a way that more closely resembles human behaviour.
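To make the contrast with a conventional programme concrete, here is a minimal sketch using one of the simplest learning algorithms, a perceptron. Rather than being told the rule that separates two classes, it adjusts its weights every time it misclassifies a labelled example. The function names and the toy data points are hypothetical, chosen only for illustration; real military AI systems rely on far larger datasets and far more complex models.

```python
# Toy illustration (hypothetical data): the rule separating the two classes
# is never written down; the perceptron learns it from labelled examples.

def train_perceptron(samples, labels, epochs=20, lr=0.1):
    """Learn weights w and bias b so that sign(w.x + b) matches labels (+1 / -1)."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            predicted = 1 if activation >= 0 else -1
            if predicted != y:
                # Misclassified: move the decision boundary towards the correct side.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def predict(w, b, x):
    """Apply the learned rule to a new input."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Hypothetical two-feature examples: low values are class -1, high values class +1.
samples = [(0.0, 0.0), (0.2, 0.3), (0.1, 0.5), (0.9, 0.8), (1.0, 1.0), (0.8, 0.6)]
labels = [-1, -1, -1, 1, 1, 1]

w, b = train_perceptron(samples, labels)
print(predict(w, b, (0.05, 0.1)), predict(w, b, (0.95, 0.9)))
```

The learned weights generalise to inputs the model never saw during training, which is what distinguishes learning from simply following hard-coded instructions.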

In the military field, the volume of available information is enormous, and AI can help turn it into better decisions. Software systems of this kind have also been shown to increase soldiers' safety, to be effective in search and rescue operations and to help prevent enemy attacks, among many other applications.

As a result, AI has quickly established itself in broad sectors of society, and more specifically in the military, where it is already considered a "great asset". In the United States, for example, several parts of the armed forces use AI for information analysis, decision-making, vehicle autonomy, logistics and weaponry. The military employs this cutting-edge technology for everything from the most routine tasks to the operation of drones.

The US Air Force's Skyborg, NTS-3 and Golden Horde programmes stand out in this regard. Skyborg seeks to integrate artificial intelligence into unmanned aerial vehicles so that they can operate autonomously, without a human pilot at the controls. The programme is also investigating how these aircraft could be integrated into operational formations of manned and unmanned aircraft.

NTS-3, for its part, is an experimental navigation satellite programme that tests new GPS signals and receiver technology for providing positioning data to military units, an essential capability on the battlefield. Golden Horde, on the other hand, seeks to network weapons such as bombs and guided missiles so that they can work in concert. A group of weapons would thus be able to share data such as the location of enemy forces or their defences.

In this way, the weapons could react in real time, weaken enemy defences and divide combat tasks among themselves, which could make them highly effective.

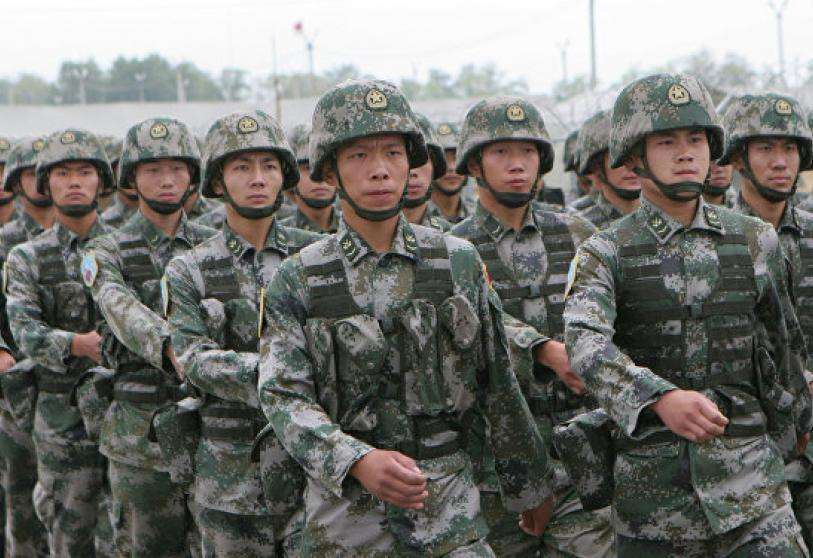

Another example is China, which announced its AI strategy as early as 2017, stating that the technology would be used to "improve national competitiveness and protect national security". China also stepped up its use of AI during the COVID-19 pandemic to identify people infected with the virus.

Russian President Vladimir Putin has also stated that "whoever becomes the leader of the AI sphere will dominate the world".

Despite its effectiveness and many advantages, AI has a well-known weakness: anyone who understands how an algorithm works can disable it or even turn it against its owners. A Chinese security lab demonstrated this in March 2019, when it managed to fool the sophisticated AI algorithms of Tesla cars and make them err in autonomous driving. Developments of this kind have set off alarm bells in the United States, where General Jim Mattis, Secretary of Defense until January 2019, had already warned that China was outpacing the country in the development of AI.
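The weakness described above can be illustrated with a toy sketch, assuming a simple linear classifier whose weights are known to the attacker (the weights, input values and function names are all hypothetical). Because the attacker knows how the model computes its score, they can shift each input feature by a small amount in the direction that lowers the score of the current class, which is the idea behind the fast gradient sign method. The attack on Tesla's driving software targeted a far more complex vision system, so this only sketches the principle.

```python
# Hypothetical weights of an already-trained linear classifier, assumed
# to be known to the attacker.
WEIGHTS = [0.9, -0.4, 0.6]
BIAS = -0.2


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def classify(x):
    return 1 if score(x) >= 0 else -1


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_example(x, epsilon):
    """Shift every feature by at most epsilon against the current class.

    For a linear model the gradient of the score with respect to x is just
    the weight vector, so stepping along -label * sign(w) lowers the score
    of the predicted class as fast as possible for that budget.
    """
    label = classify(x)
    return [xi - epsilon * label * sign(w) for xi, w in zip(x, WEIGHTS)]


x = [0.5, 0.3, 0.2]
x_adv = adversarial_example(x, epsilon=0.15)
print(classify(x), classify(x_adv))
```

No feature changes by more than 0.15, yet the decision flips, because the small changes are chosen with full knowledge of how the model weighs each input.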

Added to this is the moral dilemma posed by weapons that are not subject to human orders. The European Union has already taken a position on the matter, issuing guidelines stating that "lethal autonomous systems must be subject to human control".